On "Present Rate No Singularity"

Sorry, people who aren't in the Bay Area: it's ingroup bullshit time.

I have observed and participated in an irritating number of conversations of the form:

Alice: So that’s why I think the ultimate effect of this legislation on housing prices in California is going to be positive.

Bob: But we’re not going to have time to build new apartment buildings before we’re all dead.

Alice: In the next thirty or forty years we’re going to realize that marijuana legalization was a huge mistake.

Bob: Well, obviously! The transformative AI is going to tell us if marijuana legalization is good or not.

Alice: I’m curious about your thoughts about the replication crisis.

Bob: So, assuming that we don’t turn into grey goo, I see a lot of reason for optimism.

Alice: I’ve sold the first novel in my ten-book series!

Bob: Aren’t you worried that large language models are going to automate writing novels by the time you’re done with book three?

Alice: I see you’ve taken up exercising!

Bob: Well, I’m convinced of the longevity benefits— I mean, assuming that we’re not all going to die long before it’s relevant.

Alice: My child wants to be an engineer when he grows up.

Bob: By the time he grows up humans won’t have jobs. That is, if we’re not all extinct.

I would like to witness fewer conversations of this sort.

I’ve seen some people say “present rate no singularity” to mean “making the perhaps implausible assumption that the future is going to continue basically the same way the past did, perhaps with some new technologies but not with anything earthshakingly bizarre.” I think that this term should be more widely known, so that people can say “present rate no singularity” (or type “PRNS”) to establish that the conversation assumes that humanity thirty years from now will be alive, made of flesh, and employed. Then none of us will have to spend mental energy thinking up new variations on “if there is an AI winter.”

I also think that, even if it is not specified, most conversations about the future should be assumed to be operating on present-rate-no-singularity, for several reasons.

First: it is really depressing to think about how we are all going to die. People should be able to talk about their novels, exercise habits, and children’s futures without thinking about how perilously close we are to extinction.

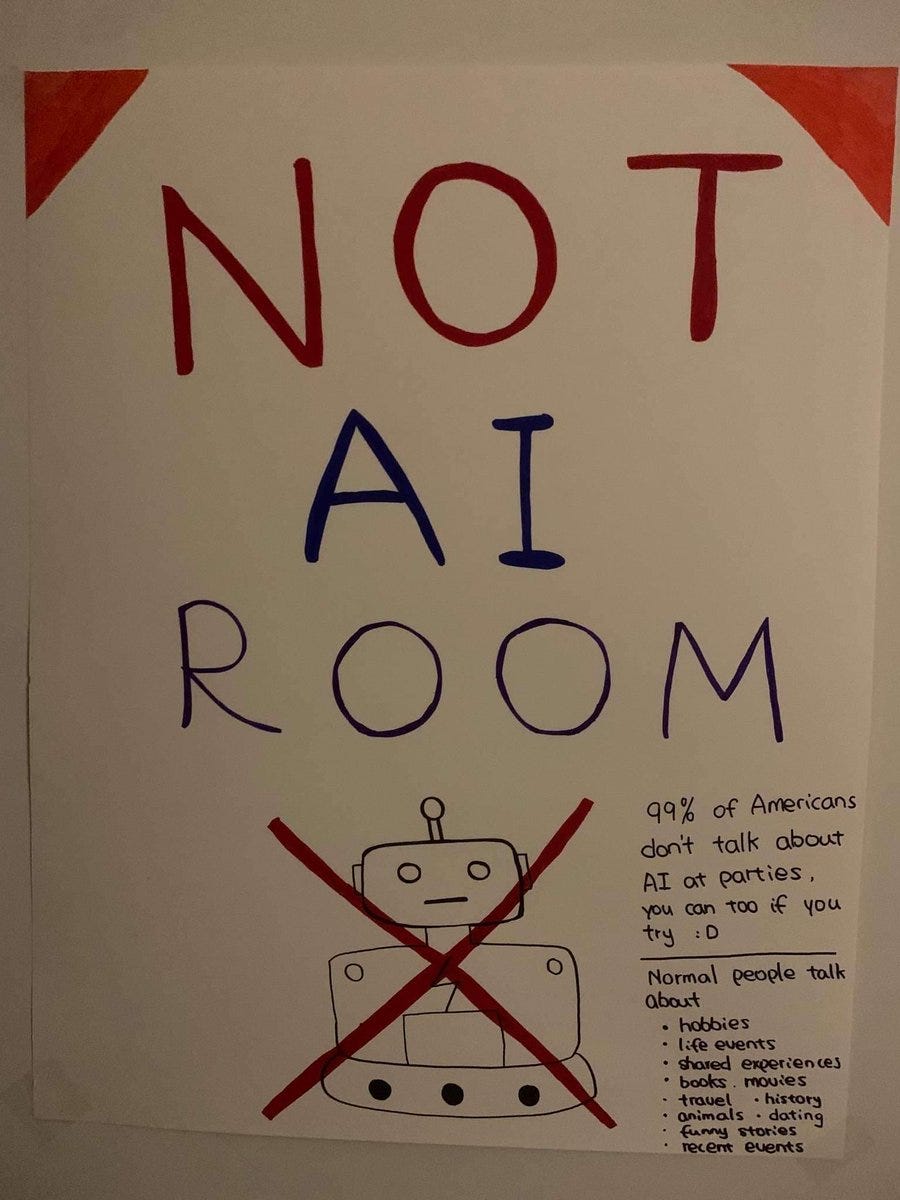

Second: not operating on present-rate-no-singularity turns every conversation that mentions the future into a conversation about AI. As strange as it may seem in the Bay Area effective altruist community, many people know nothing about AI, and yet have many interesting and intelligent things to say about topics other than AI. Alice might know a lot about the California housing crisis, even if she doesn’t have the domain-specific knowledge about AI to be able to predict how transformative AI will affect it.[1] If you want to learn about areas other than AI, it helps to be able to set AI aside for a bit and learn from other people’s areas of expertise.

Even if you don’t want to learn about anything other than AI (because it is all irrelevant because we’re all going to die), presumably you sometimes want to talk about hobbies and children and marriages and other non-AI life projects people might have. In order to do so, you should not go “we’re all going to die!” any time someone mentions something more than five years off.

Third: when every effective altruist conversation about the future turns into a conversation about AI, it is really unwelcoming to effective altruists with long AI timelines. This is the one I’m going to complain about the most, because it affects me personally. People with long AI timelines are preparing for a future without AI; effective altruists with long AI timelines usually work on something that isn’t AI, often something that will take years or decades to pay off.

The obvious response, if someone brings up AI, is to go “well, I have long timelines,” at which point your interlocutor will immediately start arguing with you about AI risk. Imagine the way you would feel, people with short AI timelines, if every time you had a conversation about your work it was like:

Bob: blah blah blah blah transformer architecture

Alice: But that doesn’t matter because Catholicism teaches that machines can’t have rational souls, so they won’t ascend to superintelligence.

This would get really fucking annoying. Even if you like arguing about Catholicism, sometimes you want to talk about new machine learning papers and not about St. Thomas Aquinas’s Five Ways.

Even if the person with long timelines says “so, uh, right. Anyway” and continues their conversation, it sucks. I can’t speak for everyone with long timelines, but for me it feels like “friendly reminder! Your community and everyone you care about thinks your life work is pointless! The movement you’ve devoted your life to has put all its eggs in a single basket and the basket has an enormous hole in it and you’re terrified that it’s going to waste all its potential and the world will be worse in ways that could have been prevented!”[2]

Over time, the path of least resistance is either to drift away or to agree “yep! Short AI timelines!” whether or not you understand the arguments in favor. That way lies groupthink, claims that aren’t subject to proper scrutiny because everyone just knows they’re true, and misallocation of altruistic resources.

A lot of people I know have contempt for climate doomers—the people who believe we’re headed to a horrifying dystopia because of climate change, so they’re severely depressed and anxious, don’t want to have children because life won’t be worth living for future generations, and are willing to sacrifice anything else that humans value in the hopes that we might be able to avert the apocalypse. They are so trapped in their doom cycles that they can’t accept the scientific consensus that global warming is really bad but will not lead to an apocalyptic dystopia where no human lives are worth living.

This kind of thing is how you become a climate doomer. You think all the time about how scary the future is going to be. You stop having conversations about anything other than our future doom. Even the minutiae of daily life start being about the doom. You drive away the people who agree that the problem is bad but disagree about how bad it is. It’s bad for your mental health, it’s bad for the movement, stop it.

I don’t think that this one norm change is going to totally solve the problem. But I do think it will help. Present rate no singularity. Tell your friends.

[1] Once we have all become uploads, all of the solar system becomes a Dyson sphere except for a small chunk of Berkeley, which is still going through the planning process to build a four-story apartment building. With all the atoms of the Earth rearranged into supercomputers running trillions of gloriously fulfilled sentient beings, and therefore nothing for the building to cast a shadow on, it’s difficult to figure out how to complete the shade assessment.

[2] I realize these things are true but, perhaps unvirtuously, I would prefer that they mostly not be brought up.

I strongly agree, obviously (https://ealifestyles.substack.com/p/i-dont-want-to-talk-about-ai)

As an added incentive, if people were less annoying about it, I'd be more open to reading about AI!

I know that this is not the main point of the article, but

> Your community and everyone you care about thinks your life work is pointless!

I know basically nothing about Wild Animal Suffering, but whenever I am sad about plans to cull animals in my city, because of ways they have inconvenienced humans, I feel comforted that there are some people who are doing work to learn how we can improve the lives of wild animals.